- Solan Sync

[2 Business ideas] Top 5 Tools to Run LLM Locally: Enhance Your Privacy & Control

Explore the top five tools for running large language models directly on your laptop. Achieve greater privacy, reduced latency, and customizable AI interactions without compromising on efficiency.

Using large language model (LLM)-based chatbots online is easy and just requires an internet connection and a reliable browser.

However, this approach often involves privacy concerns, as companies like OpenAI store your data and metadata to refine their models, which might not sit well with privacy-conscious individuals.

A great workaround is running these models locally, which gives you more control over your data.

This article introduces five practical tools for running LLMs on your own computer.

They are straightforward to download and install, and they are compatible with most major operating systems.

When you operate LLMs locally, you decide which models to use and can integrate seamlessly with models from the HuggingFace hub. You can also allow these models to access specific project folders, making the bots more responsive and contextually aware in their interactions.

Here is the list of the apps you can use:

1. Ollama

Ollama is ideal for those who prefer a minimalist setup without compromising on the capabilities of LLMs. It supports a variety of models and requires minimal configuration.

Developer and Research Friendly: Allows rapid prototyping and detailed study of LLM behavior.

Pre-built Models: Includes models like Llama 2, Mistral, and Gemma, ready to use post-download.

Efficiency: Optimized to run on NVIDIA GPUs and modern CPUs, ensuring quick and reliable model operations.
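To give a feel for how little configuration is involved, here is a minimal sketch of calling a locally running Ollama server from Python using only the standard library. It assumes Ollama is listening on its default local port and that the `llama2` model has already been downloaded; the helper names `build_payload` and `ask_ollama` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running on this machine on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "llama2") -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def ask_ollama(prompt: str, model: str = "llama2") -> str:
    """Send the prompt to the local server and return the generated text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
    )
    # Nothing leaves the machine: the request goes to localhost only.
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

With the server running and the model pulled (`ollama pull llama2`), a call such as `ask_ollama("Why does local inference help privacy?")` should return the model's answer as a plain string.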

2. GPT4All

GPT4All is an open-source project that lets you run recent LLMs directly on your local machine. This keeps your prompts on your own hardware and can also shorten response times, since requests skip the network round trip.

Download and Installation: Download the GPT4All application from its official site and install it.

Usage: Select the desired model from its interface; if your system has CUDA support, the application will leverage GPU acceleration, boosting performance drastically.

Features: It supports Retrieval-Augmented Generation, enabling the model to provide context-aware responses by accessing multiple local directories.
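The idea behind Retrieval-Augmented Generation can be sketched in a few lines. The example below is not how GPT4All implements it; it swaps in a deliberately naive word-overlap ranking over local text files, just to show the retrieve-then-prompt pattern. All function names are hypothetical.

```python
import re
from pathlib import Path


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def load_documents(folder: str) -> dict[str, str]:
    """Read every .txt file under the folder into a name -> text mapping."""
    return {
        str(path): path.read_text(encoding="utf-8", errors="ignore")
        for path in sorted(Path(folder).rglob("*.txt"))
    }


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return names of up to top_k documents sharing the most words with the question."""
    query = tokenize(question)
    scored = [(len(query & tokenize(text)), name) for name, text in documents.items()]
    # Drop documents with no overlap, then sort by score (highest first) and name.
    ranked = sorted(
        (item for item in scored if item[0] > 0),
        key=lambda item: (-item[0], item[1]),
    )
    return [name for _, name in ranked[:top_k]]


def build_prompt(question: str, documents: dict[str, str], top_k: int = 2) -> str:
    """Prepend the retrieved documents to the question as context."""
    names = retrieve(question, documents, top_k)
    context = "\n\n".join(documents[name] for name in names)
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {question}"
```

The string returned by `build_prompt` is what you would hand to the local model. Real tools typically rank passages with embeddings rather than raw word overlap, which handles synonyms and paraphrases far better.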

3. LM Studio

LM Studio serves as a streamlined desktop application designed to manage LLMs locally. Its user-friendly interface and compatibility with models from HuggingFace make it a versatile tool for offline AI experiments.

Functionality: Operates completely offline with full model control.

Model Integration: Easily integrates with models available on HuggingFace, facilitating immediate use.

Advanced Options: Offers GPU offloading to improve computational efficiency, though, unlike GPT4All, it cannot draw context from project files.

4. LLaMA.cpp

GitHub - ggerganov/llama.cpp: LLM inference in C/C++

Developed by Georgi Gerganov, LLaMA.cpp implements inference for Meta’s LLaMA architecture in plain C/C++, making it a robust choice for developers looking to integrate LLM functionality into their applications.

Installation: Use pip to install the Python bindings for LLaMA.cpp (the package is named llama-cpp-python).

Customization: Offers extensive parameter adjustments to tailor the output according to specific needs.

Performance: Capable of running on both GPUs and CPUs, designed to cater to a variety of operational environments.
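As a rough illustration of the parameter adjustments mentioned above, here is a minimal sketch using the llama-cpp-python bindings. The model path is a placeholder you would replace with a GGUF file you have downloaded, and the sampling values are illustrative assumptions, not recommendations. The helper names are hypothetical.

```python
def generation_settings(creative: bool = False) -> dict:
    """Return sampling parameters; the values are illustrative only."""
    return {
        "max_tokens": 256,                        # cap on generated tokens
        "temperature": 0.9 if creative else 0.2,  # higher means more varied output
        "top_p": 0.95,                            # nucleus sampling cutoff
        "stop": ["\n\n"],                         # stop at the first blank line
    }


def complete(prompt: str, model_path: str, creative: bool = False) -> str:
    """Load a GGUF model and return the completion text for the prompt."""
    # Imported here so generation_settings() works without the package installed.
    from llama_cpp import Llama  # pip install llama-cpp-python

    llm = Llama(
        model_path=model_path,  # placeholder: path to a local GGUF model file
        n_ctx=2048,             # context window in tokens
        n_gpu_layers=0,         # 0 keeps every layer on the CPU
    )
    result = llm(prompt, **generation_settings(creative))
    return result["choices"][0]["text"]
```

Raising `n_gpu_layers` moves that many layers onto the GPU when the bindings were built with GPU support, which is how the same code can serve both CPU-only laptops and machines with a graphics card.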

5. NVIDIA Chat with RTX

Chat with RTX (since renamed ChatRTX) is an application that draws on your personal documents and data to provide tailored LLM responses, running on NVIDIA’s RTX GPUs.

Targeted Use: Best suited for those with RTX 30 series GPUs or newer.

Technology: Utilizes TensorRT-LLM and RAG for efficient and context-aware text generation.

Personalization: Retrieves from user-provided content at query time, offering highly relevant and customized responses.

Using LLMs locally not only secures sensitive data but also provides a customizable, efficient, and responsive setup that cloud solutions often fail to offer.

Whether you are a developer, a researcher, or simply a privacy-conscious individual, these tools provide powerful alternatives to harness the capabilities of LLMs securely within your own infrastructure.

Let’s talk about the opportunities here.

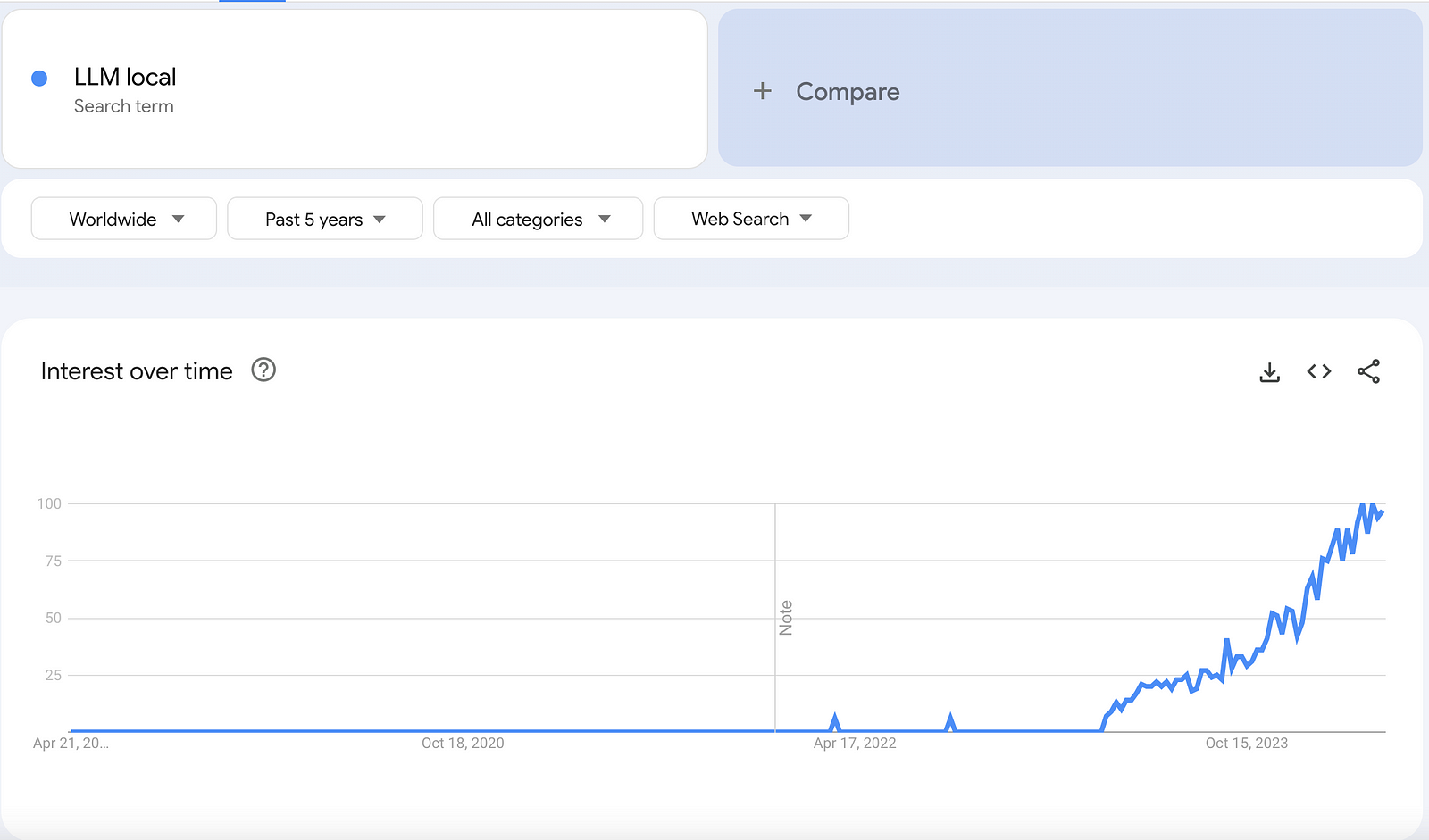

Why Now

The landscape of technology, especially in the realm of artificial intelligence, is rapidly evolving. Large Language Models (LLMs) have demonstrated significant potential in various industries, from customer service and content generation to more complex applications like programming assistance and data analysis.

Running these models locally is increasingly relevant today due to growing concerns about data privacy and the need for bespoke AI solutions that are not reliant on cloud infrastructures. Businesses and individuals are seeking greater control over their data and are wary of continual subscription costs associated with cloud services. This shift presents a timely opportunity for innovative solutions that cater to privacy-conscious users and those needing tailored, efficient AI tools.

The Opportunity

The demand for private, customizable, and efficient AI solutions provides a fertile ground for businesses capable of simplifying the local deployment of LLMs. By capitalizing on this need, a business can offer products that:

Enhance Privacy: By processing data locally, businesses and individuals can avoid the privacy risks associated with cloud-based services.

Reduce Latency: Local processing eliminates dependence on internet connectivity, thereby reducing latency and improving response times for AI-driven applications.

Customizability and Integration: Solutions can be tailored specifically to integrate seamlessly with existing local systems and workflows, providing a significant competitive edge over generic cloud solutions.

Cost-Effectiveness: After the initial setup, local models can be more cost-effective over time compared to subscription-based cloud models, appealing particularly to small to medium-sized enterprises (SMEs).
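The cost-effectiveness argument comes down to simple break-even arithmetic. The sketch below uses made-up figures purely for illustration (the hardware price, running cost, and subscription fee are assumptions, not quotes from any vendor).

```python
import math
from typing import Optional


def break_even_months(
    upfront_cost: float, local_monthly: float, cloud_monthly: float
) -> Optional[int]:
    """Return the first whole month at which cumulative local cost is no higher than cloud cost."""
    monthly_saving = cloud_monthly - local_monthly
    if monthly_saving <= 0:
        return None  # local running costs are too high to ever catch up
    return math.ceil(upfront_cost / monthly_saving)


# Hypothetical numbers: 1800 for a GPU workstation, 15 per month for power,
# versus 90 per month for a small team's cloud subscriptions.
months = break_even_months(upfront_cost=1800, local_monthly=15, cloud_monthly=90)
print(months)  # 24
```

Under these assumed figures the local setup pays for itself after two years. The result is very sensitive to the inputs, so it is worth plugging in your own hardware quote and usage before drawing conclusions.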

Business Idea #1: AI Privacy Suite

Concept:

Develop a software suite that enables businesses to run LLMs directly on their local machines securely. This suite would include tools for easy installation, model management, and integration capabilities with existing business systems, focused on ensuring data never leaves the corporate intranet.

Why It’s Attractive:

Market Differentiator: Focuses on privacy and integration, which are quickly becoming priorities for many businesses.

Broad Appeal: Suitable for various industries including legal, healthcare, and finance where privacy is paramount.

Regulatory Compliance: Helps businesses comply with stringent data protection laws like GDPR and HIPAA.

Action Plan:

Develop partnerships with LLM providers like OpenAI and HuggingFace to include their models in the suite.

Create encryption and security protocols that ensure data is processed locally and securely.

Build a simple user interface that allows non-technical users to manage and integrate LLMs with ease.

Launch a pilot program with target industries to gather initial feedback and refine the product.
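One small building block for such a suite could be a guard that refuses to send prompts anywhere except the local machine or a private network address. The sketch below is an assumption about how that check might look, not a complete security protocol; the function name is hypothetical.

```python
import ipaddress
from urllib.parse import urlparse


def stays_on_local_network(url: str) -> bool:
    """Return True only if the URL's host is localhost or a loopback/private IP literal."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # A DNS name could resolve to any address, so this sketch rejects it.
        return False
    return address.is_loopback or address.is_private


print(stays_on_local_network("http://localhost:11434/api/generate"))  # True
print(stays_on_local_network("https://api.example.com/v1/chat"))      # False
```

Rejecting every DNS name is deliberately conservative. A real product would more likely keep an allowlist of approved internal hostnames and pair this check with network-level controls such as firewall rules.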

Validation Points:

Ensure the tool complies with international data protection regulations.

Conduct market research to identify the most demanded features for integration.

Test the software with potential clients to validate its ease of use and performance.

Business Idea #2: Local LLM Deployment Consultancy

Concept:

Offer consultancy services to help businesses implement local LLM solutions tailored to their specific needs. This service includes analysis of the best models for their requirements, setup and integration of these models, and ongoing support and training for their teams.

Why It’s Attractive:

Expertise and Customization: Leverages deep AI expertise to provide customized solutions, not just off-the-shelf products.

Continuous Demand: As AI technologies evolve, businesses will need ongoing advice and updates on best practices and new models.

Scalable Model: Initial consultancy can lead to long-term management contracts.

Action Plan:

Assemble a team of AI experts and software engineers familiar with various LLM platforms.

Develop a comprehensive service package including setup, maintenance, and training modules.

Market the consultancy through webinars and workshops focusing on the benefits of local LLM implementation.

Offer the first consultation for free to new customers to promote trial and adoption.

Validation Points:

Perform competitive analysis to set pricing strategies that reflect the value provided.

Use case studies and testimonials from early customers to demonstrate effectiveness and ROI.

Keep abreast of technological advances to ensure consultancy recommendations remain state-of-the-art.

By tapping into the trend towards local data processing and the continuous advancements in LLM technologies, these business ideas are poised to meet the current and future needs of a diverse clientele looking for efficient, private AI solutions.

Thank you for reading this far. You can also access the free Top 100 AI Tools List and the AI-Powered Business Ideas Guides through my free newsletter.

What Will You Get?

Access to AI-Powered Business Ideas.

Access to our newsletters for help along your journey.

Access to our Upcoming Premium Tools for free.

If you find this helpful, please consider buying me a cup of coffee.

📚 Try Awesome AI Tools & Prompts with the Best Deals

🧰 Find the Best AI Content Creation jobs

⭐️ ChatGPT materials

💡 Bonus

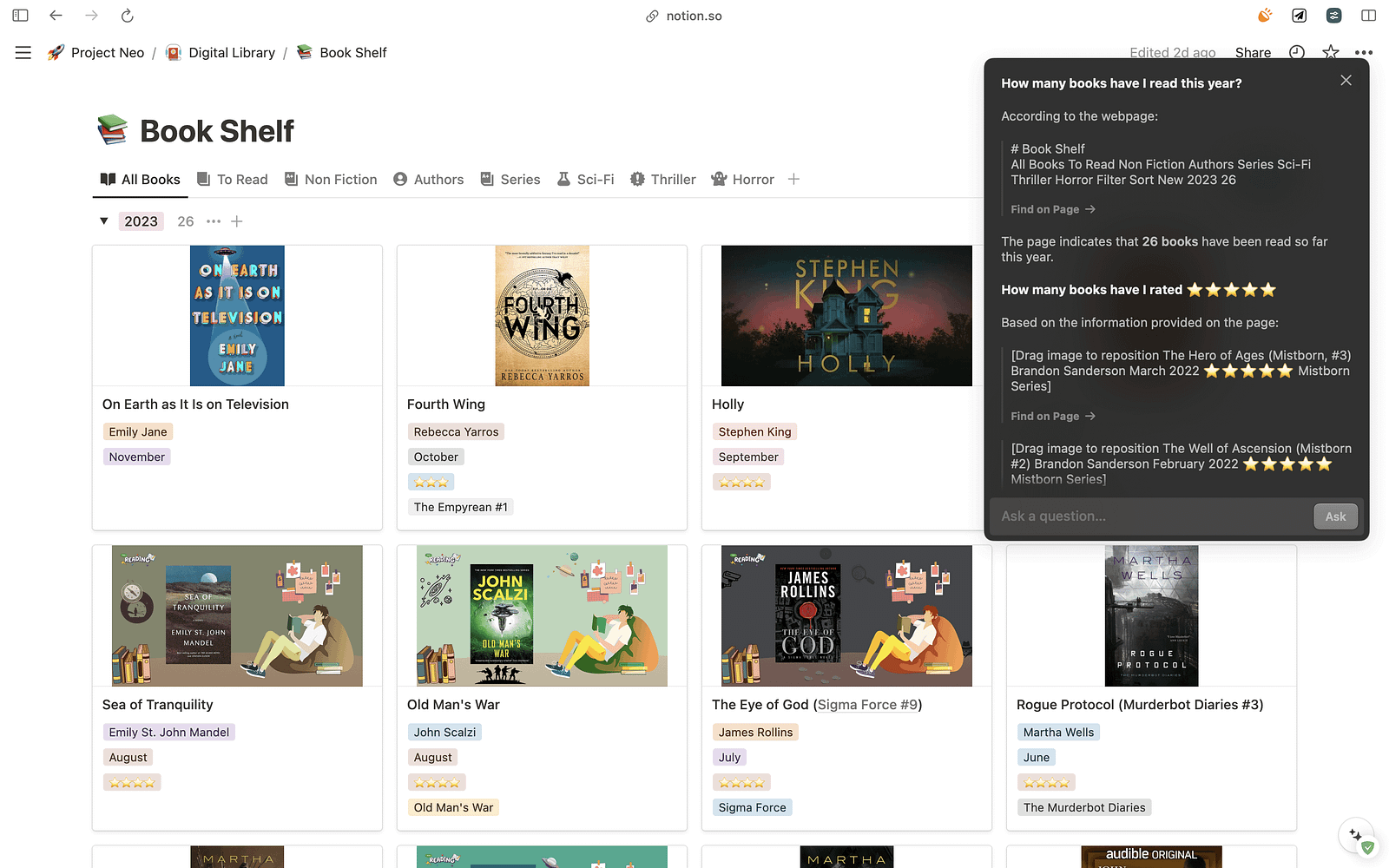

🪄 Notion AI — If you are fan of Notion and solo-entrepreneur, Check this out.

If you’re a fan of notion this new Notion AI feature Q&A will be a total GameChanger for you.

After using notion for 3 years it has practically become my second brain it’s my favorite productivity app.

And I use it for managing almost all aspects of my day but my problem now with having so much stored on ocean is quickly referring back to things.

Let me show you how easy it is to use so you can ask it things like

“What is the status of my partnership” or “How many books have I read this year?” and this is unlike other AI tools because the model truly comprehends your notion workspace.

So if you want to boost your productivity this new year go check out Notion AI and some of the awesome new features Q&A!

Reply