Examining the Reality Behind Google Gemini’s Buzz: A Skeptical Viewpoint

Google’s newly revealed Gemini has been causing a stir, rivaling the excitement generated by OpenAI’s ChatGPT launch.

Gemini stands out in the AI arena for its multimodal capabilities. Unlike traditional models, which handle one kind of input (such as text or images) in isolation, Gemini is designed from scratch to integrate these modalities. This enables it to process and reason across text, images, video, audio, and even code.

On paper, and in demonstrations, Gemini seems to surpass GPT-4. But there’s more to the story than meets the eye. Despite the buzz, there are reasons to be skeptical about Gemini’s superiority.

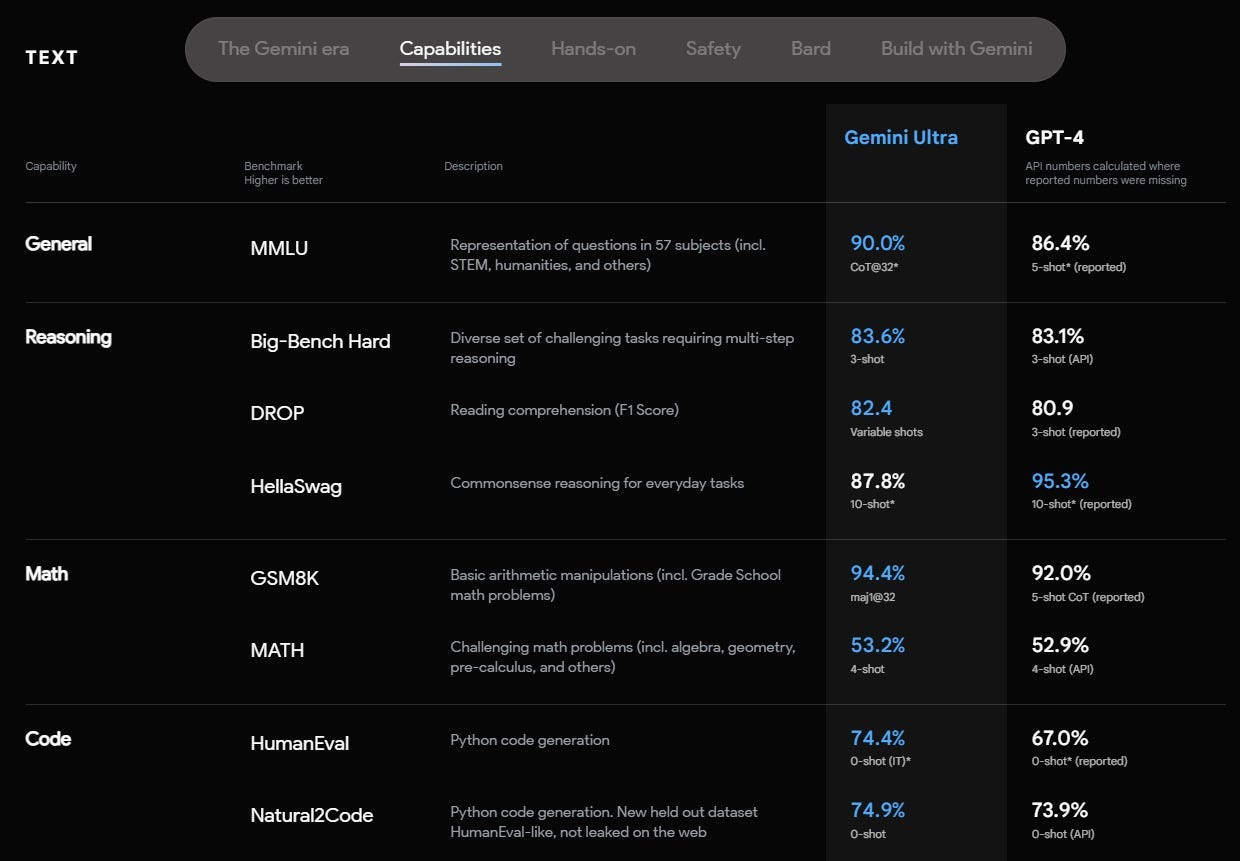

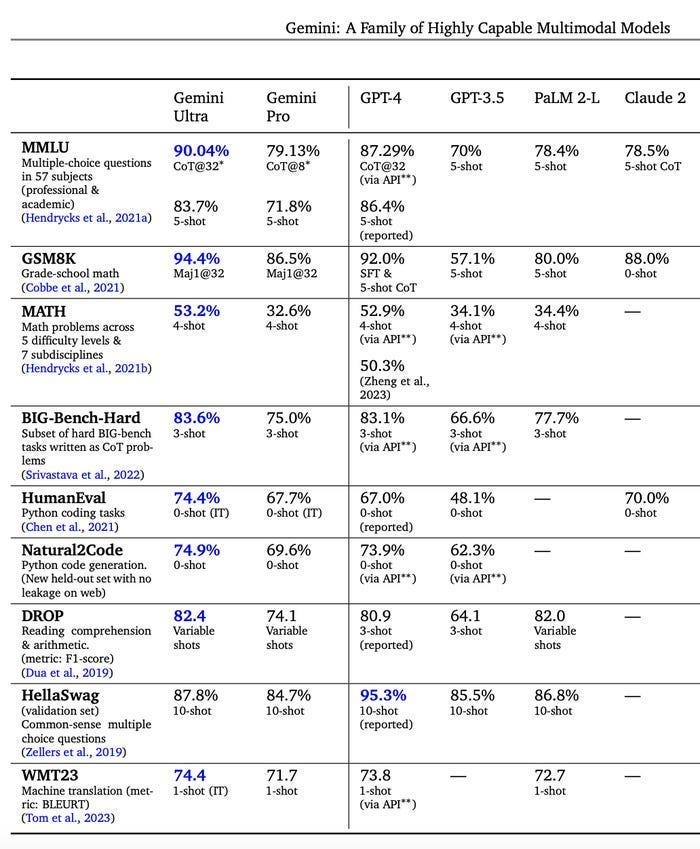

One example often cited is a comparison image where Google claims Gemini Ultra outperforms GPT-4. However, the actual performance gap may not be as significant as it seems.

You may have come across a detailed comparison between Gemini Ultra and GPT-4. It shows that Gemini Ultra does outperform GPT-4, but the difference narrows once you dig into the details, particularly those in the 60-page paper Google published.

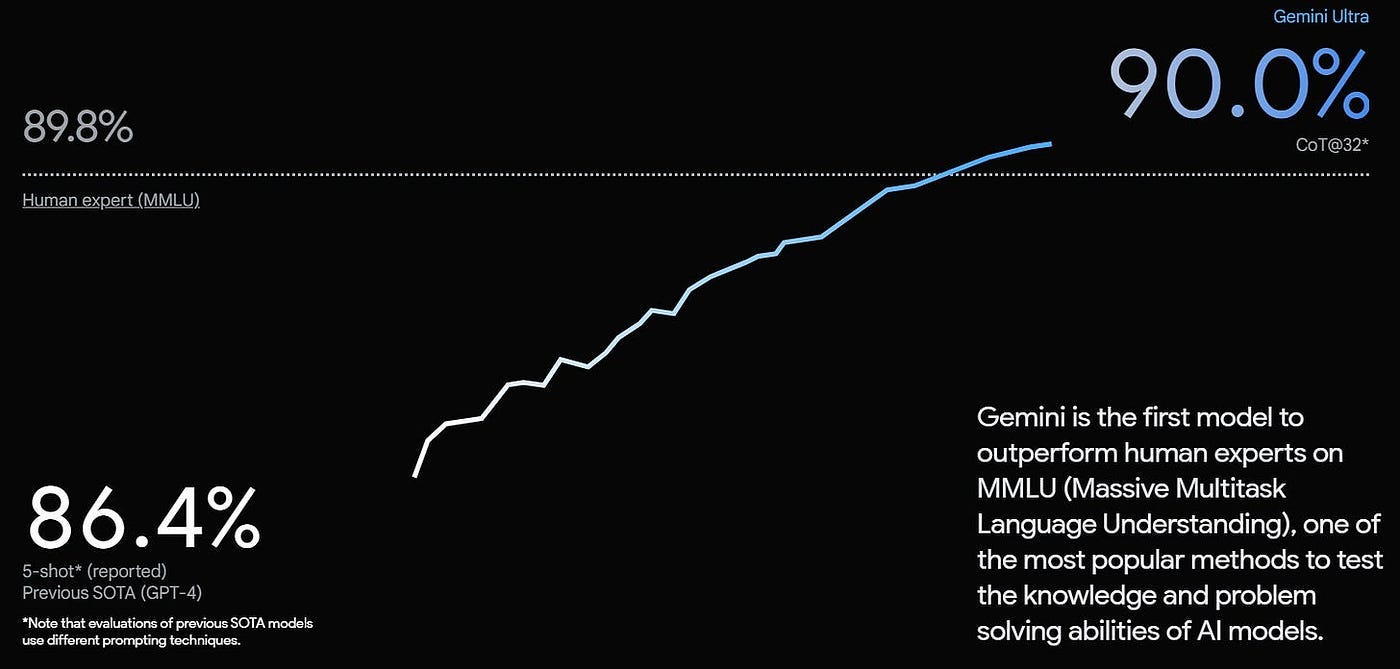

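One detail worth noting is the MMLU (Massive Multitask Language Understanding) comparison. The headline figure sets Gemini Ultra’s 90.04%, obtained with a prompting technique known as CoT@32, against GPT-4’s 86.4%, which was reported with 5-shot prompting. When GPT-4 is evaluated with the same CoT@32 technique, its score climbs to 87.29%, which narrows the gap.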
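To make the prompting difference concrete: CoT@32 broadly means sampling 32 chain-of-thought responses per question and deriving one final answer from them, rather than scoring a single response. The Python sketch below shows one way such a consensus step could look, with a fallback to a single greedy answer when the samples disagree. The function name `cot_at_k`, the `threshold` value, and the sample data are all hypothetical, and this is an illustration of the general idea, not Google’s or OpenAI’s actual evaluation code.

```python
from collections import Counter
from typing import Sequence


def cot_at_k(sampled_answers: Sequence[str], greedy_answer: str,
             threshold: float = 0.6) -> str:
    """Pick a final answer from k chain-of-thought samples.

    If the most common answer's share of the votes reaches `threshold`,
    return it; otherwise fall back to the greedy (single-pass) answer.
    The threshold value here is an illustrative assumption.
    """
    if not sampled_answers:
        return greedy_answer
    top_answer, votes = Counter(sampled_answers).most_common(1)[0]
    if votes / len(sampled_answers) >= threshold:
        return top_answer
    return greedy_answer


# 32 hypothetical sampled answers to one multiple-choice question:
# 22 of 32 agree on "B", so the consensus answer is used.
samples = ["B"] * 22 + ["C"] * 7 + ["A"] * 3
confident = cot_at_k(samples, greedy_answer="C")

# No answer reaches the threshold here, so the greedy answer is used.
split = ["A"] * 12 + ["B"] * 11 + ["C"] * 9
uncertain = cot_at_k(split, greedy_answer="C")
```

Because a consensus over many samples tends to score higher than a single 5-shot response, comparing one model’s CoT@32 result with another model’s 5-shot result is not a like-for-like comparison.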

Currently, the only accessible version of Gemini for users is Gemini Pro, integrated into Bard. However, this version doesn’t quite measure up to GPT-4’s capabilities.

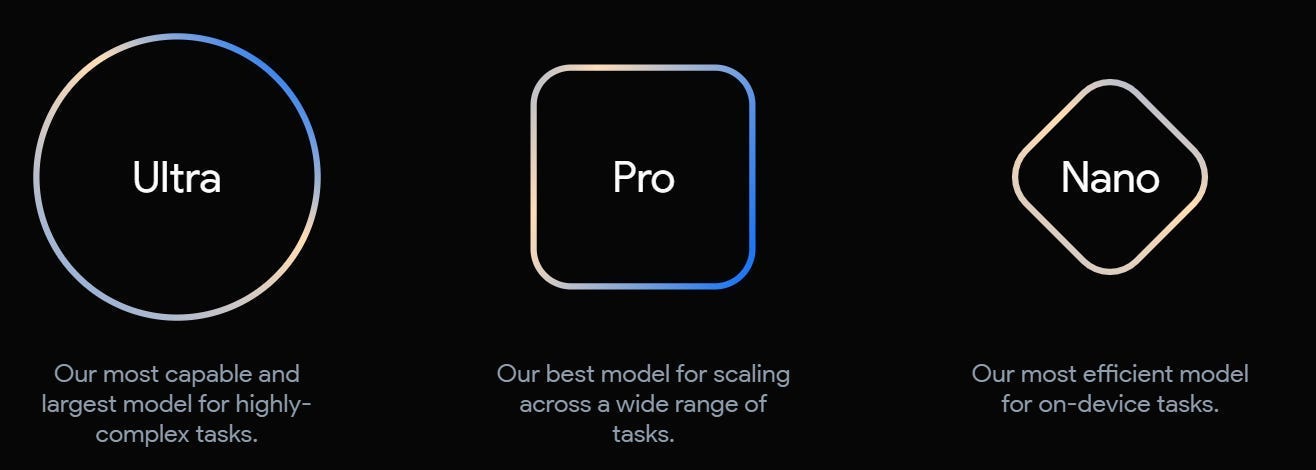

Gemini was released in three variants:

- Gemini Ultra: The flagship model, boasting the highest complexity and capability, surpassing GPT-4 in certain aspects.

- Gemini Pro: A versatile model aimed at a broad spectrum of tasks, positioned more as a competitor to GPT-3.5 than the more advanced GPT-4.

- Gemini Nano: Designed specifically for on-device applications, optimized for efficiency and lower resource requirements.

The Focus of Discussion, Gemini Ultra, Remains Unavailable: A Closer Look at Availability and Information Gaps

At the time of writing, Gemini Ultra, the model at the center of the discussion, is not yet accessible to the public. Its release through “Bard Advanced” is expected in early 2024.

For now, our understanding of Gemini Ultra is limited to the statistics Google has published and to Google’s own “hands-on” demo video, neither of which offers a complete picture.

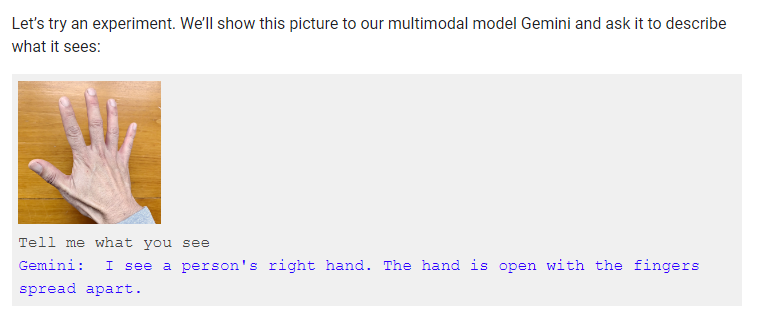

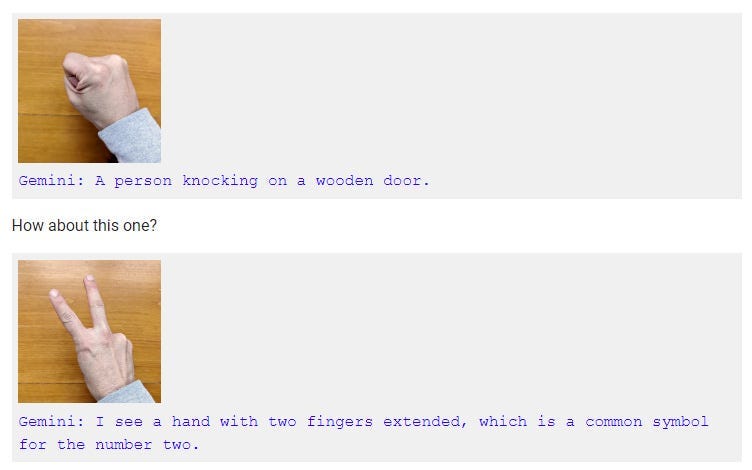

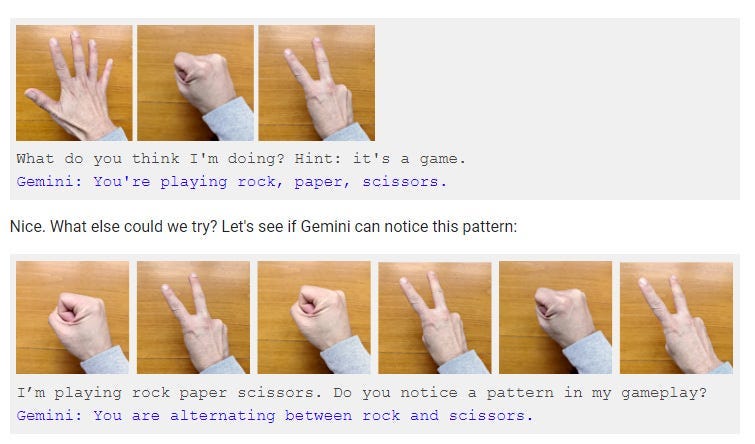

In the demonstration, Google showcases Gemini’s multimodal capabilities, highlighting features like conversational AI, rapid image recognition, real-time object tracking, and more. It looks quite impressive until you read the details in the video description.

The description reveals that, for the purposes of the demo, latency was reduced and Gemini’s responses were shortened for brevity. This means the interactions were not happening in real time, and the spoken prompts heard in the video were not actually part of the live process.

A Bloomberg report sheds more light on this. When asked, Google acknowledged that the video demonstration was not conducted in real time with spoken prompts. Instead, it used still image frames from raw footage, and text prompts were written out for Gemini to respond to.

While searching for further insights about Google’s demo, I came across a “How it’s Made” article on Google’s blog, and it was an eye-opener. What appeared to be a feature that set Gemini apart from GPT-4, the ability to understand and respond to video input, was actually driven by a sequence of predetermined image frames.

Let’s take a closer look at how the rock, paper, scissors clip was produced.

The disparity between the spoken prompt in the video and the written prompt used to obtain Gemini’s results is quite evident. This discrepancy leads to questions about the authenticity of Gemini’s capabilities as showcased in the demo.

The polished presentation of the demo has led some to question the true performance of Gemini Ultra. It’s hard to gauge the model’s actual effectiveness without either conducting a hands-on test personally or seeing an unedited, straightforward demonstration by someone else.

An additional point of concern is the lack of transparency regarding Gemini’s training data. As noted by several users on Twitter, Google has not disclosed details about the sourcing or filtering of the training data used for Gemini. This is particularly notable given Google’s own emphasis on the importance of quality training data in AI development.

Jeff Dean, Chief Scientist at Google DeepMind and Google Research, responded to concerns raised on Twitter about the Gemini Ultra model. The conversation highlighted the anticipation and skepticism surrounding its release.

Twitter users are eagerly waiting for public access to Gemini Ultra, hoping to verify if it truly matches its hyped capabilities. While excitement is understandable, a cautious approach is advisable given the uncertainties.

Several points fuel this caution:

- Gemini’s performance in the demo might not reflect its real-world capabilities.

- Only Gemini Pro is currently integrated with Bard, and its performance is comparable to GPT-3.5, not the more advanced GPT-4.

- Google hasn’t yet provided details about the training data used for Gemini, which is a critical aspect of AI development.

The launch of Bard earlier in 2023 serves as a cautionary tale. Despite initial enthusiasm, it faced user disappointment due to various errors, tempering expectations for new AI releases.

In conclusion, while Gemini Ultra could be as revolutionary as claimed, skepticism is warranted until more concrete, hands-on evaluations are available. Until then, I remain reserved about the hype around Gemini.

