- Solan Sync

Ollama Chat: The Easiest Way to Run Local AI on Your PC (Mac & Windows)

Ollama Chat is the easiest way to run Local AI on Mac & Windows. 1-click install, chat with PDFs, RAG support, and multimodal features.

If you’ve ever dreamed of having your own private AI assistant running locally — without the hassle of Python code or command-line gymnastics — Ollama Chat is here to deliver.

The new desktop application for macOS and Windows turns Ollama from a developer-only tool into a one-click LocalGPT experience. With its graphical interface, drag-and-drop RAG support, and multimodal capabilities, it’s now one of the most beginner-friendly ways to chat with local LLMs.

In this guide, we’ll break down Ollama Chat’s features, installation process, pros & cons, and why it might be the easiest entry point into Local AI today.

What Is Ollama Chat?

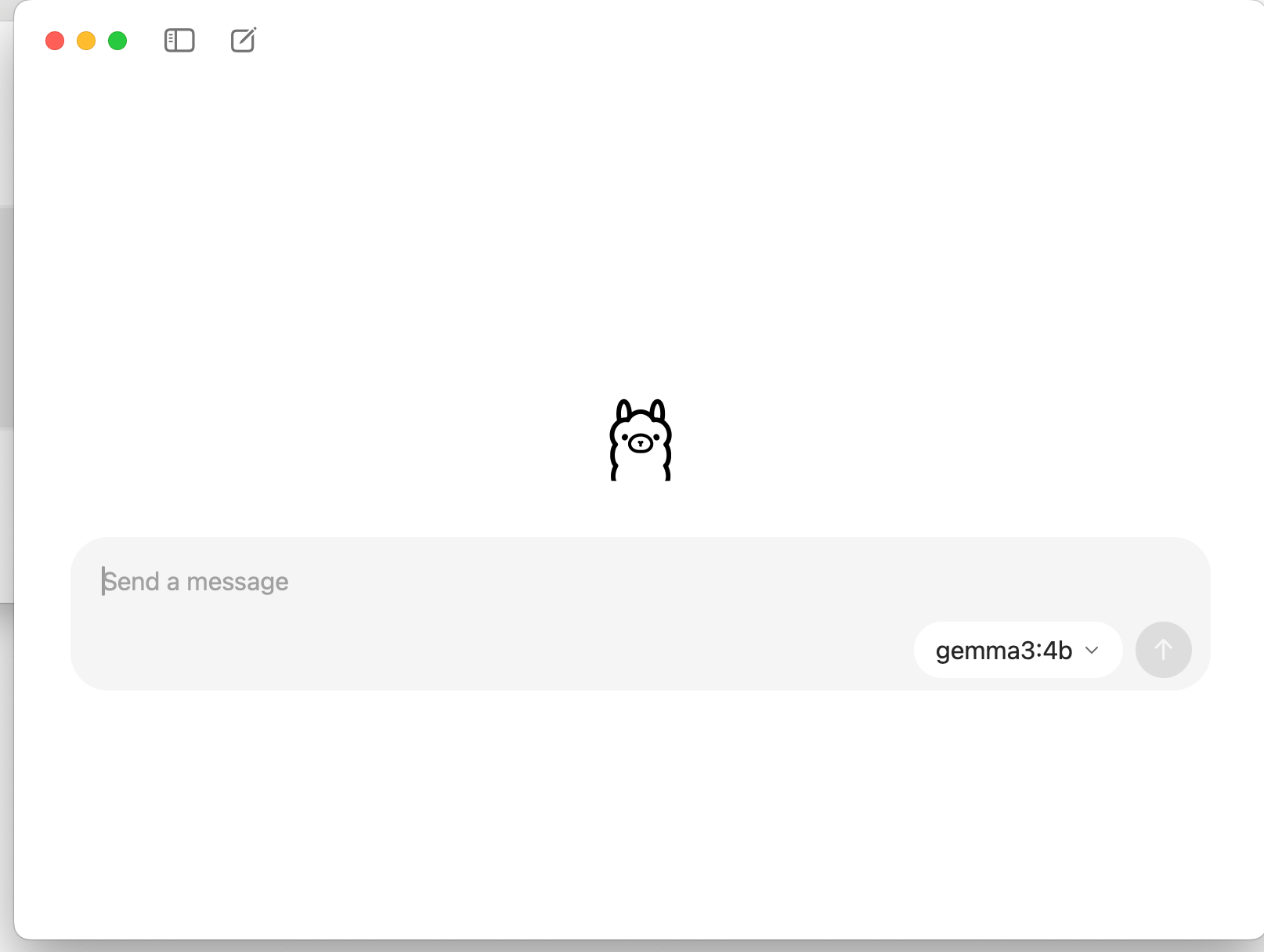

Previously, Ollama was a command-line-only tool. You needed to be comfortable typing `ollama run llama3` in a terminal just to get started. Now, the new Ollama Chat GUI makes local AI as simple as opening an app.

Key highlights:

✅ Built-in Chat Interface: Select a model and chat instantly.

📄 File Chat (RAG): Drop in PDFs or text files to query their content.

🔄 Adjustable Context Length: Handle bigger documents by raising the context window in settings.

🖼 Multimodal Support: Send images to vision-capable models such as Google’s Gemma 3 or Qwen’s vision-language (VL) models.

💻 Cross-Platform: Available on both Mac and Windows.

This makes Ollama one of the most accessible Local LLM frameworks, no GPU mastery required.
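For readers curious what image input looks like outside the app, here is a minimal sketch of a chat request with an attached image, shaped for Ollama's local HTTP API (`/api/chat`). The model tag `gemma3`, the helper names, and the placeholder image bytes are assumptions for illustration; whether the desktop app sends exactly this request internally is not something this article confirms.

```python
import base64
import json


def build_image_message(prompt: str, image_bytes: bytes) -> dict:
    """Build one user message that carries a base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"role": "user", "content": prompt, "images": [encoded]}


def build_image_request(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Wrap the message in a non-streaming chat request body."""
    return {
        "model": model,  # assumed tag; use any vision-capable model you have pulled
        "messages": [build_image_message(prompt, image_bytes)],
        "stream": False,
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file read with open(path, "rb").
    fake_image = b"\x89PNG placeholder bytes"
    request = build_image_request("gemma3", "What is in this picture?", fake_image)
    print(json.dumps(request, indent=2))
```

The resulting JSON would be sent as a POST body to the local Ollama server, which listens on `http://localhost:11434` by default.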

How to Install Ollama Chat (Step by Step)

Getting started takes just a few clicks:

Download the installer → Ollama Download Page

Choose your OS (macOS or Windows).

Run the installer (like any other app).

Launch Ollama Chat — it opens automatically after install.

Pick a model from the drop-down list (start small if you don’t have a GPU).

⚠️ Pro tip: If your machine doesn’t have a dedicated GPU, avoid models larger than 4B parameters.
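The 4B guideline follows from simple arithmetic: the memory needed for the weights is roughly the parameter count times the bytes per weight. The sketch below is a back-of-the-envelope estimate only. It assumes an idealized 4 or 16 bits per weight and ignores the context cache and runtime overhead, so real usage will be somewhat higher.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    return params_billions * bytes_per_weight


if __name__ == "__main__":
    for label, params in [("0.5B", 0.5), ("4B", 4.0), ("8B", 8.0)]:
        four_bit = estimate_weight_memory_gb(params, 4)
        sixteen_bit = estimate_weight_memory_gb(params, 16)
        print(f"{label}: ~{four_bit:.2f} GB at 4-bit, ~{sixteen_bit:.2f} GB at 16-bit")
```

By this estimate, a 4B model quantized to about 4 bits per weight needs around 2 GB for weights, which fits comfortably in the RAM of most recent laptops, while larger models quickly crowd out everything else on a CPU-only machine.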

How to Chat With PDFs and Documents

One of Ollama Chat’s biggest upgrades is RAG support:

Simply drag and drop your PDF or text file into the chat window.

Ollama automatically loads the content into the prompt.

Ask questions, summarize, or analyze the file instantly.

👉 Be sure to increase your context window in settings if you’re working with long documents.
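To see why the context window matters, here is a minimal sketch of the same idea done by hand: paste a document into the prompt, check that it roughly fits, and request a larger window through the `num_ctx` option of Ollama's local HTTP API. The 4-characters-per-token figure is a rough heuristic rather than a real tokenizer, and the helper names and prompt wording are assumptions for illustration, not the app's actual internals.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # default local endpoint
CHARS_PER_TOKEN = 4  # rough heuristic for English text, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def build_document_request(model: str, document: str, question: str, num_ctx: int) -> dict:
    """Put the whole document in the prompt and ask for a num_ctx-token window."""
    prompt = (
        "Use the document below to answer the question.\n\n"
        f"--- DOCUMENT ---\n{document}\n--- END DOCUMENT ---\n\n"
        f"Question: {question}"
    )
    needed = estimate_tokens(prompt)
    if needed > num_ctx:
        raise ValueError(
            f"Prompt needs roughly {needed} tokens but num_ctx is {num_ctx}; "
            "raise the context length or shorten the document."
        )
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "options": {"num_ctx": num_ctx},
        "stream": False,
    }


def send_chat(payload: dict) -> str:
    """POST the request to a running local Ollama server and return the reply text."""
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]
```

A call such as `send_chat(build_document_request("llama3", text, "Summarize this.", 8192))` would only work with the Ollama server running and the model already downloaded. The check leaves no room for the model's answer, so in practice the window should be set comfortably above the estimate.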

Best Ollama Models for Local AI

Not all models are created equal. Here’s a quick cheat-sheet:

For simple chat & content:

SmolLM2-360M

Qwen2.5-0.5B

For RAG & documents (2B+ recommended):

Gemma2-2B

Llama3.2-3B-instruct

Granite3.1 series

For best balance of speed & quality:

Qwen3-4B-Instruct-2507 (the latest update: faster, with better formatting and improved reasoning).

Pros & Cons of Ollama Chat

👍 Strengths

1-Click Setup: No coding or CLI needed.

Beginner Friendly: Ideal entry point into Local LLMs.

RAG & Multimodal: Instantly chat with PDFs, code, and images.

Large Model Library: Wide model support via the Ollama model library.

👎 Limitations

No System Prompt UI: Can’t set a system prompt to steer the model’s behavior directly in the app.

No Hyperparameter Controls: Settings like temperature or top-p are locked.

Black-Box RAG: No customization for chunking, embeddings, or retrieval.

Slower Updates: Some cutting-edge models don’t load yet.
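The first two limitations apply to the app's interface, not to Ollama itself. As a minimal sketch, a request to Ollama's local HTTP API (`/api/chat`) can carry a system message plus `temperature` and `top_p` in its `options`. The function name, default values, and example prompts below are assumptions for illustration.

```python
import json


def build_custom_chat_request(
    model: str,
    system_prompt: str,
    user_message: str,
    temperature: float = 0.7,
    top_p: float = 0.9,
) -> dict:
    """Build a chat request body with a system prompt and sampling options."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "options": {"temperature": temperature, "top_p": top_p},
        "stream": False,
    }


if __name__ == "__main__":
    request = build_custom_chat_request(
        model="llama3",  # assumed tag; use any model you have pulled
        system_prompt="You are a concise assistant. Answer in two sentences.",
        user_message="What is a context window?",
        temperature=0.2,
    )
    # POST this JSON to http://localhost:11434/api/chat on a running Ollama server.
    print(json.dumps(request, indent=2))
```

Lower temperature values make answers more repeatable, which tends to suit document Q&A; higher values give more varied output for brainstorming.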

Who Should Use Ollama Chat?

🆕 Beginners who want an easy local AI app.

📚 Researchers & students who want to chat with PDFs or academic papers.

👨‍💻 Developers who like a GUI for quick tests but the CLI for advanced control.

🏢 Business users interested in private RAG workflows (IBM Granite series is great for this).

If you want maximum customization, you’ll still need the Ollama CLI or tools like LM Studio. But if you want simplicity and speed, Ollama Chat is hard to beat.

Final Verdict: A Game-Changer for Local AI

The Ollama Chat desktop app makes running a private AI as easy as installing Zoom or Spotify. With its drag-and-drop RAG, multimodal support, and friendly GUI, it massively lowers the entry barrier.

While power users may miss deeper customization, this trade-off is what makes it so beginner-friendly. For most users, Ollama Chat will be the fastest way to set up a personal AI assistant that runs fully locally, in just minutes.

👉 Ready to try it? Download Ollama Chat here.
