Solan Sync

OpenAI's GPT-5 Is Here

OpenAI has rolled out GPT-5 to all ChatGPT users, unifying its models into a single adaptive system with faster responses, deeper reasoning, and new tools. Plus, major updates from Meta and Tesla in the AI race.

OpenAI's GPT-5 Is Here

OpenAI has officially released GPT-5 across all ChatGPT tiers, replacing legacy models including GPT-4o, o3, and the o4-mini variants. GPT-5 is an adaptive system with three parts: a fast base model for everyday requests, a deeper “GPT-5 Thinking” model for complex tasks, and a built-in router that decides which one handles each request, so users no longer need to pick a model manually.

OpenAI reports up to 80% fewer hallucinations on complex prompts and noticeably better factual consistency. GPT-5 Pro, a higher-tier variant with expanded context windows, higher throughput, and research-grade performance, is exclusive to Pro and Team users. Enterprise and Edu customers keep access to legacy models for 60 days, and API access to the older models is unchanged; OpenAI says it has no immediate plans to retire them.

Six major updates to ChatGPT:

- Unified GPT-5 system: replaces the model picker and routes automatically between the base and reasoning models.
- “GPT-5 Thinking” mode: deeper reasoning for high-stakes, advanced tasks, available to Pro and Team users.
- Personality presets: four built-in options (Cynic, Robot, Listener, and Nerd) give instant control over tone and conversational style.
- Better “vibe coding”: natural-language prompts produce working UI layouts with functional previews directly in Canvas.
- Upgraded Advanced Voice Mode: more context-aware and customizable, now available to all users, including inside custom GPTs.
- Gmail and Google Calendar integration: ChatGPT can summarize threads, manage tasks, and plan schedules, rolling out to Pro users first.

Separately, OpenAI signed a deal with the U.S. General Services Administration to provide ChatGPT Enterprise to federal agencies for $1 per agency. The package includes 60 days of unrestricted access to advanced models, onboarding support, and a private government-only user community. Details on the deployment architecture remain vague, particularly whether it will run on private cloud or on-premises infrastructure, which raises questions about compliance and data protection for sensitive government workloads.

Meta flexes AI and XR muscle with retina-grade headsets and emotional voice tech

Meta is pushing hard on both mixed reality and generative AI. At SIGGRAPH 2025, it will show three experimental headset prototypes. The standout, Tiramisu, delivers 90 pixels per degree (3.6× Quest 3), 1,400 nits of brightness (14× Quest 3), and 3× the contrast, bringing VR visuals closer to photorealism. The Boba 3 series targets ultra-wide immersion, with a 180° horizontal × 120° vertical field of view and a 4K×4K display per eye, using Quest 3–grade optics.


On the AI front, Meta has acquired WaveForms AI, a startup building models that detect and synthesize emotional cues in speech. Founded by former OpenAI and Google engineers, WaveForms had raised $40M from a16z. The team joins Meta’s Superintelligence Labs, following other high-profile hires from OpenAI, Anthropic, Apple, and GitHub. Together with its earlier acquisition of Play AI and the hiring of voice lead Johan Schalkwyk (ex-Google), the deal shows Meta investing heavily in emotionally responsive voice agents.

Its privacy practices, however, are showing cracks. Contractors who review chatbot conversations say users regularly share unredacted names, contact details, and explicit content with Meta’s AI chatbots, raising fresh concerns about data exposure and moderation gaps in Meta’s generative systems.

Tesla abandons Dojo, doubles down on AI5/AI6

Tesla has officially ended its Dojo supercomputer project, citing internal inefficiencies and leadership turnover, including the departure of architect Peter Bannon. Elon Musk confirmed the move, saying Tesla will no longer split its efforts across different AI chip designs. The company will focus instead on its AI5 and AI6 chips, which Musk claims will be “excellent for inference and at least pretty good for training.” AI5 chips are expected in late 2026, and AI6 will be produced at Samsung’s fab in Taylor, Texas. Nvidia and AMD will supply compute in the meantime, and Musk teased that clustering AI5/AI6 chips could evolve into a leaner “Dojo 3.”

Image: ARC-AGI

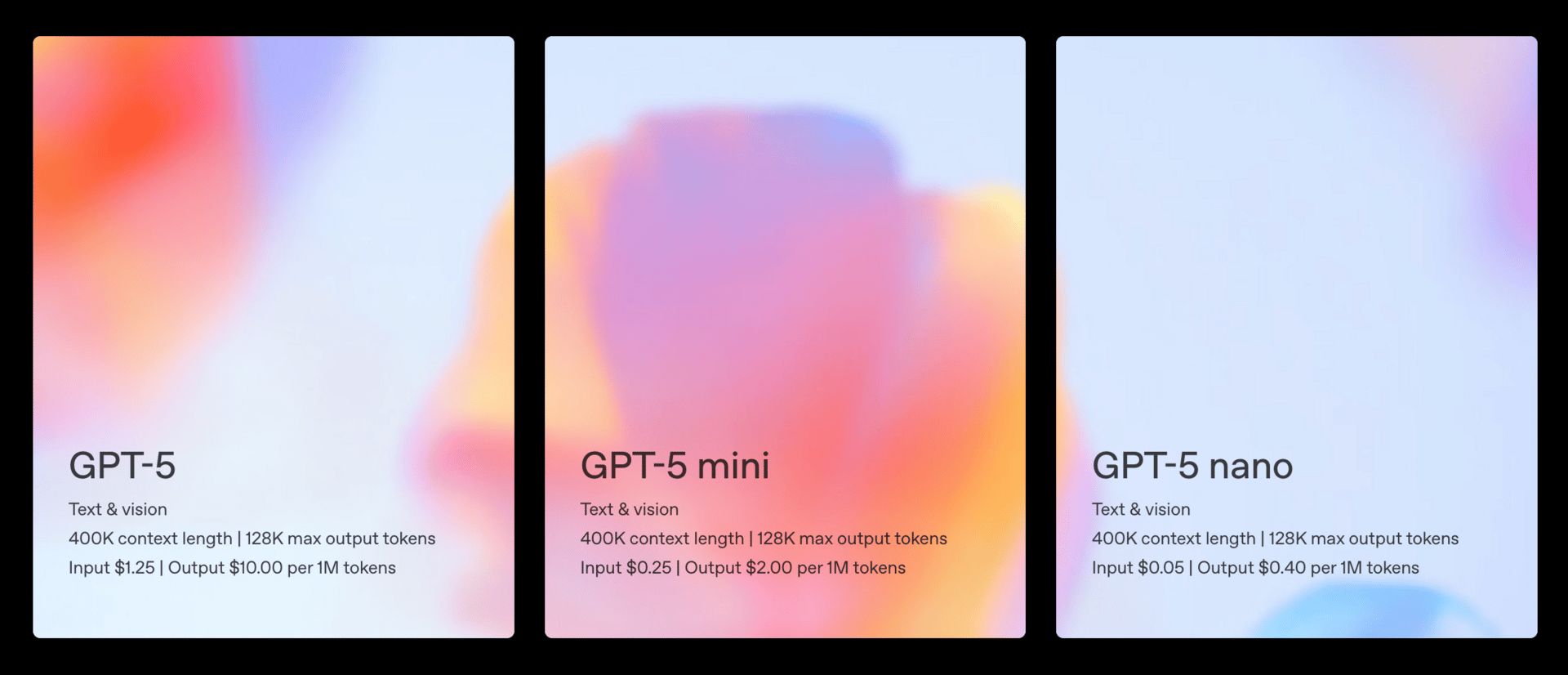

In the latest ARC-AGI benchmark report, Grok 4 (Thinking) still outperformed GPT-5 (High) on ARC-AGI-2, scoring ~16% versus 9.9%, though at a significantly higher cost per task ($2–$4 vs. $0.73). On ARC-AGI-1, Grok 4 again led with 68% against GPT-5’s 65.7%. The lighter GPT-5 Mini and Nano variants score lower but cost less per task, the expected trade-off.
